A new study has replicated the findings from a two year old study which had concluded that gayface is real and machine learning algorithms can accurately predict from facial characteristics alone which human subjects are gay or straight.

From the abstract of the more recent study,

> Recent research used machine learning methods to predict a person's sexual orientation from their photograph (Wang and Kosinski, 2017). To verify this result, two of these models are replicated, one based on a deep neural network (DNN) and one on facial morphology (FM). Using a new dataset of 20,910 photographs from dating websites, the ability to predict sexual orientation is confirmed (DNN accuracy male 68%, female 77%, FM male 62%, female 72%). To investigate whether facial features such as brightness or predominant colours are predictive of sexual orientation, a new model trained on highly blurred facial images was created. This model was also able to predict sexual orientation (male 63%, female 72%). The tested models are invariant to intentional changes to a subject's makeup, eyewear, facial hair and head pose (angle that the photograph is taken at). It is shown that the head pose is not correlated with sexual orientation. While demonstrating that dating profile images carry rich information about sexual orientation these results leave open the question of how much is determined by facial morphology and how much by differences in grooming, presentation and lifestyle. The advent of new technology that is able to detect sexual orientation in this way may have serious implications for the privacy and safety of gay men and women.

A description of Deep Neural Networks and Facial Morphology,

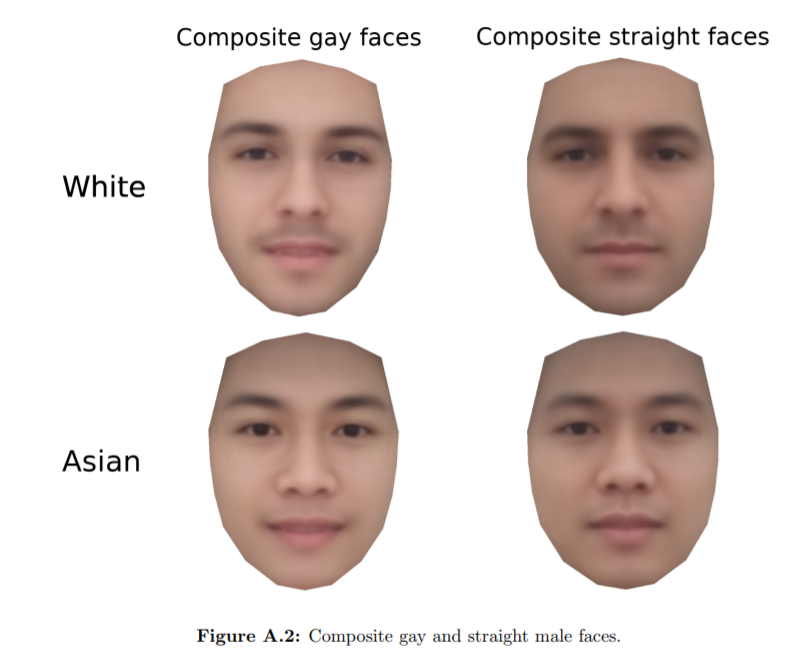

> Two of their models used ML techniques to predict sexual orientation from a photograph of a face. One used deep neural networks (DNN) [1, Study 1a] to extract features from the cropped facial image (see Figure 1.1). The second study used only facial morphology [1, Study 3]. Facial morphology refers to the shape and position of the main facial features (such as eyes and nose) and the outline of the face (see Figure 1.2 for an example of the information available in the facial morphology studies). The images were gathered from online dating profiles and from Facebook. The data was limited to white individuals from the United States.

Briefly, DNN is a zoomed-in version of facial morphology analysis. One wonders if the gayface researchers only studied White faces for fear of offending the thin-skinned POCs.

> For comparison, W&K [1] also ran an experiment in which they tested humans’ ability to detect sexual orientation from the same photographs. They found that humans are able to predict sexual orientation with modest success, achieving an accuracy measured by the Area Under the Curve (AUC) of AUC=.61 for male images and AUC=.54 for female images. Both their ML models outperformed the humans. The deep learning classifier had an accuracy of AUC=.81 for males and AUC=.71 for females [1, Study 1a]. The facial morphology classifier scored AUC=.85 for males and AUC=.70 for females [1, Study 4].
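
AUC is not the same kind of number as the percentage accuracies quoted in the abstract. It is the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one, so 0.5 is coin-flip performance and 1.0 is a perfect ranking. A minimal sketch of that pairwise (Mann-Whitney) definition, assuming plain lists of classifier scores; the function name `auc` and the scores are hypothetical and are not data from either study:

```python
from itertools import product


def auc(pos_scores, neg_scores):
    """Pairwise AUC: the fraction of (positive, negative) pairs in which the
    positive example gets the higher score. Tied pairs count as half."""
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative score")
    correct = 0.0
    for p, n in product(pos_scores, neg_scores):
        if p > n:
            correct += 1.0
        elif p == n:
            correct += 0.5
    return correct / (len(pos_scores) * len(neg_scores))


# Hypothetical scores for illustration only.
print(auc([0.9, 0.8, 0.4], [0.7, 0.3, 0.2]))
```

Here 8 of the 9 positive/negative pairs are ordered correctly, giving an AUC of about 0.89. The quadratic pairwise loop is chosen for readability; rank-based formulas give the same value faster on large datasets.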

Humans have pretty good gaydar, but AI beats them. Coincidentally, AI is more racist than humans.

I suspect that human gaydar accuracy would improve if only urbanites who lived among lots of gays were studied. Gayface is one of those things — like Specialface — that you can’t miss once you’ve become accustomed to seeing it and familiar with its peculiarities. Flyovers who rarely see a gay or a [special] can have trouble identifying some of the marginal cases, which is why, for instance, South Carolinians missed Lindsey Graham’s light loafers and why so many evangelicals can’t tell that their preachers are obviously gay and/or low T cucks.

> W&K note that their second model can predict sexual orientation from the individual components of the face, such as the contour of the face or the mouth. In particular, the facial contour predicts sexual orientation with an accuracy of AUC=.75 for men and AUC=.63 for women. Based on this outcome, W&K make the claim that this validates a theory of sexual orientation called prenatal hormone theory (PHT) [4, 5, 6]. PHT predicts that gay people will have “gender-atypical” facial morphology due to their exposure to differing hormone environments in the womb. They argue that this finding is unlikely to be due to different styles of presentation or grooming because it is quite difficult to alter the contour of one’s face [1].

The researchers go on to explain that their new machine learning algorithm controlled for factors like photo angle, facial hair, makeup, and eyewear, and still accurately predicted sexual orientation from facial morphology, bolstering the hypothesis that gayface is the result of a prenatal influence on the developing fetus. Or: Swishiognomy is real.

FYI, in a tangential discovery that will shock no one, straight women have a greater preference for wearing makeup than do lesbians.

The composite male faces:

I bet every reader here could have easily identified the gayfaces from the chadfaces without knowing beforehand. There’s just something in the way gays loooooook…….

A prankster tried out the new GayI:

Ok, but the psychopath AI pinged loudly.

Was there ever any doubt?

LOL

No moldbuggery here!